Navigating AI with Pestalozzi – Part 3: Liability

Key Takeaways

- In principle, AI-deploying companies are liable for AI output or actions generated by them just as if they had generated the output or acted without the use of AI. This means that AI-deploying companies are liable if they wilfully or negligently use AI tools so that it constitutes a breach of contract or tort, and if this AI use causes damage to others. Therefore, diligence is key when offering AI-powered services.

- In the future, AI providers and, depending on the level of the AI tool customization, also AI-deploying companies may face strict (i.e., non-fault based) liability regarding injured individuals for personal or property damage as well as damage arising from corrupted or destroyed data. Because most likely, over time, Switzerland will align its product liability legislation with pending new EU legislation. For the time being, providers are only exposed to such liability if they sell tangible products with AI embedded.

- Some typical liability risks for AI use cases can be mitigated in the agreement with the AI provider (e.g., excluding the provider's right to use or sell data generated through your use of the AI tool).

- Contractual limitation of liability with respect to customers is possible to the same extent that it would be allowed for any other means of contract fulfilment. Especially if limitation of liability clauses are contained in general terms and conditions, attention should be paid to their enforceability.

Existing Legal Framework on Liability – what Applied in an AI-free World also Applies to AI

In Switzerland, damage caused by AI tools can lead to civil liability based on breach of contract, tort, or product liability (a specific kind of tort liability based on the Product Liability Act).

Contractual and Tort Liability

Without specific legal provisions, liability arising from the use of AI is governed by the existing legal framework in Switzerland. In plain terms, this means that what is not allowed without the use of AI is also prohibited when using AI. The focus of the following chapter is on potential liability issues for companies that deploy AI ("AI-deploying companies") and generate output with the AI-embedded applications, or let their customers generate output with it. Thus, AI-deploying companies are civilly liable for damage caused to others by use of AI tools if this use somehow constitutes a breach of contract or tort. Examples for torts caused by AI are violations of data protection and intellectual property rights, or unfair competitive behavior.

With a few exceptions, both contractual and tort liability are attributed only if the AI-deploying company willfully or negligently causes the contract violation or tort. Therefore, not only the employees of AI-deploying companies but also customers will have to learn to interact responsibly with AI tools (e.g., with large languages models ("LLMs")). For example, if customers provoke problematic AI output through their own unlawful input (in case of LLMs, the entered "prompt" by the customer), the deploying company should not be liable for this output, at least not if the software embedded in the AI tool contains reasonable measures to prevent unlawful output.

Other potential sources of faulty AI output lie in the development stage of the AI tool. Examples are its programming, the choice of data sets that the AI is trained on, as well as the duration of its training (AI tools can be under- as well as overtrained). Most AI tools are "black boxes", meaning that beyond their original programming and training, humans do not know how the AI tools arrive at a particular output. This makes it difficult to anticipate their future behavior, and to prove a causal link between faulty output and a specific step during the development stage. In using such a black box mechanism, the AI-deploying company knowingly assumes a risk. Appropriate diligence is, thus, key in choosing the AI provider and the AI tool. Important aspects to consider are:

- the size of the training data sets: likely large and high-quality training sets are preferred;

- monitoring and update: choose an AI tool that is continually monitored and periodically updated by the provider.

In some cases, having an employee run AI generated output through an internet search engine to check for copyrighted texts or pictures or otherwise having an individual check AI generated output before it is released to third parties can further reduce generative AI related liability risks. Whether such steps are useful will depend on the AI tool's particular task. Hence, this may be less relevant for a chatbot, whose purpose is reducing the need for human attention.

Additional liability mitigation tips to consider when setting up your contracts with AI providers or your customers will follow below.

Product Liability

In some cases, AI tools are first installed on tangible products or machines that are then sold or leased to companies (e.g., waiter robots at restaurants). Under such circumstances, the manufacturer of the product itself (= the AI provider) is directly liable for personal or property damage caused by a faulty product, based on the Product Liability Act. Product liability also pertains to those who substantially alter the product, which might include some AI-deploying companies ("quasi-manufacturers"). Due to the complexity and autonomy of AI, however, it may turn out to be difficult for an injured individual to prove that the AI was actually faulty.

As of today, product liability is generally limited to tangible products. Yet, this year, the EU Parliament endorsed a revision of its Product Liability Directive, which extends product liability beyond tangible products to include software itself (thus including AI tools). Next to personal or property damage, it also covers damage arising from corrupted or destroyed data. In addition, the revised directive lowers the burden of proof for the injured individual to show defectiveness of the product or software and causality. Switzerland will most likely, and over time, adapt its Product Liability Act to reflect this revision. The revised EU directive still needs to be formally approved by the EU Council, and its provisions will, at the earliest, only take effect by the end of 2026. Considering this timeline, it may still take several years until product liability in Switzerland also covers faulty software as such.

Contractual Mitigation of Liability Risks in the Deployer–Provider Relationship

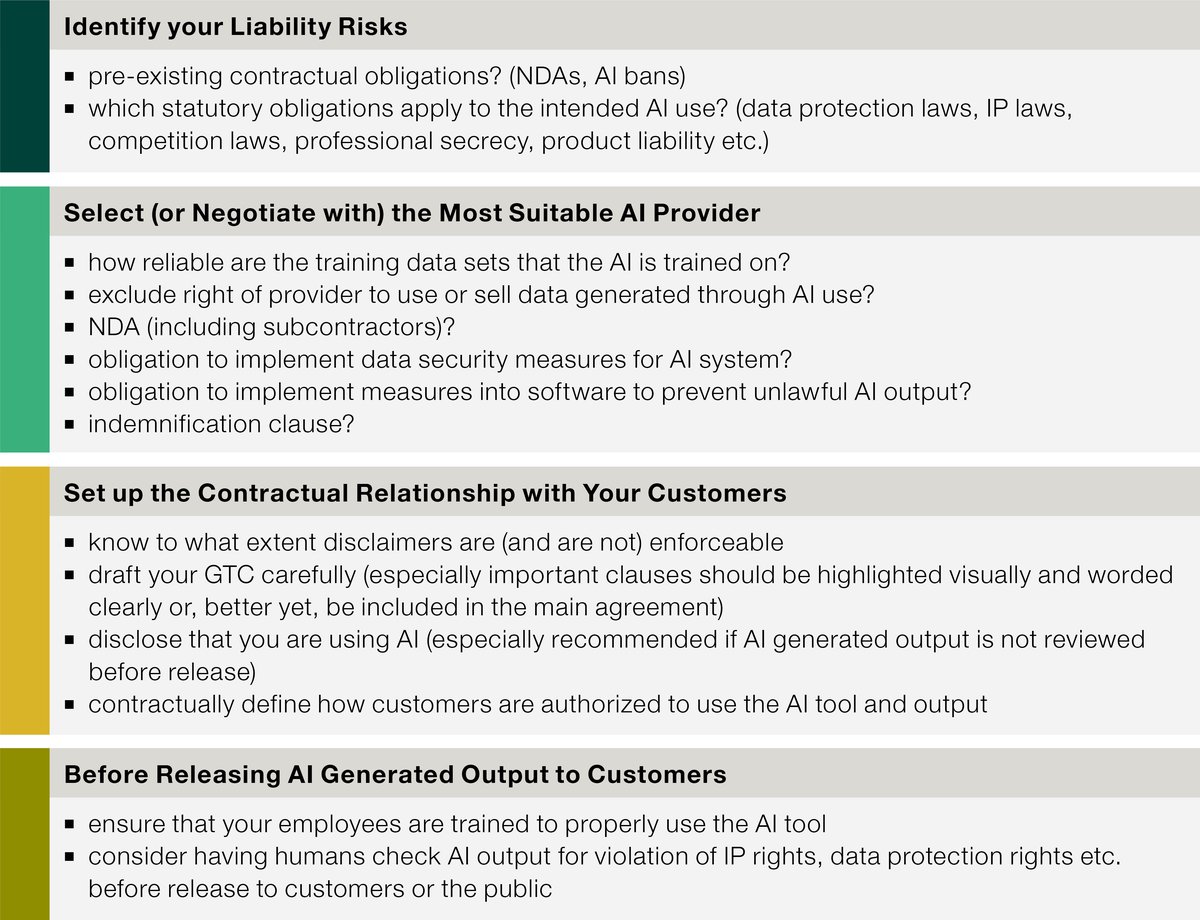

Before approaching potential AI providers, future AI-deploying companies should first identify their own pre-existing contractual and statutory restrictions that might expose them to liability if they launch an AI powered service. Some examples of potential restrictions are:

- the data the AI-deploying company wants the AI tool to process is covered by pre-existing non-disclosure agreements;

- the existing customer contracts completely prohibit the use of AI for specific parts of contract fulfilment;

- statutory data protection obligations, or

- professional secrecy laws;

Many of the liability risks thus identified can be addressed in the agreement with the AI provider. Most AI-deploying companies will want to restrict or exclude rights of the provider to use (e.g., as training data) or even to sell data generated through their use of the provided AI tool. To comprehensively secure the data, it might prove helpful to have also the provider (with its subcontractors) enter into a non-disclosure agreement and to oblige the provider to implement reasonable data security measures for its AI system. Another possibility to mitigate liability risks is through contractual indemnification clauses that oblige the AI provider to indemnify the deployer for third-party claims caused by its AI tool. As with insurance policies, however, attention should be paid to the extent of such an indemnification clause's coverage.

Contractual Mitigation of Liability Risks in the Deployer–Customer Relationship

A typical way to avoid liability is by contractually limiting the AI-deploying company's liability from the outset. Under general Swiss contract law, contractual liability (but not product liability) can be excluded for slight and medium negligence. It cannot be excluded for gross negligence or intent. It is admissible to exclude, however, any contractual liability (except product liability) for subcontractors to whom contract performance is lawfully delegated. Here, the principal cannot be held liable later if the subcontractor uses AI tools to perform the contract in a way that breaches the contract. Please note that negligence or intent are judged at the level of the AI deploying company, not at the level of the AI tool, which is not recognized as a person.

Limitation of liability is often dealt with in general terms and conditions ("GTC"). Please be aware that the enforceability of such clauses may depend on how carefully they were drafted. For example, limitation of liability clauses in GTC should be highlighted visually (e.g., by using bold letters and a bigger font size) and should explicitly state the extent to which liability is excluded, rather than simply referring to the applicable provisions of the law. In a business-to-business context, consider instead addressing liability in individually negotiated agreements with the customer itself (or to specifically refer to the GTC clause excluding/limiting the liability in the agreement or an order). In a business-to-customer context, GTC clauses that create an unfair imbalance of the rights and obligations of a consumer are unenforceable. Therefore, the extent to which liability can be legally limited for consumers depends on the overall arrangement of their contractual rights and obligations.

As of now, no specific rules exist in Switzerland for limiting liability of contract performance carried out by AI tools. If the AI-deploying company's contracts or GTC already contain clauses that generally limit liability, these clauses should also cover the future use of AI tools. Disclaimers that go beyond the limitations of contractual liability outlined above are not enforceable. Disclaimers or warnings can, however, still be useful in practice for managing customers' expectations – if applicable, in combination with disclosing that AI output is created automatically and not checked by a human prior to release (e.g., the output of a chatbot). Last, exposure to liability arising out of customers' interactions with the AI tool can be further reduced by contractually outlining in what way the customers are (and are not) authorized to use the AI tool. If such clauses are contained in GTC and limit the customers' rights considerably, however, also these provisions may be considered unenforceable.

Steps to Consider if You Want to Implement AI Services and Mitigate Liability Risks

Contributors: Sarah Drukarch (Partner), Markus Winkler (Counsel), Myrtha Talirz (Associate)

No legal or tax advice

This legal update provides a high-level overview and does not claim to be comprehensive. It does not represent legal or tax advice. If you have any questions relating to this legal update or would like to have advice concerning your particular circumstances, please get in touch with your contact at Pestalozzi Attorneys at Law Ltd. or one of the contact persons mentioned in this legal update.

© 2024 Pestalozzi Attorneys at Law Ltd. All rights reserved.